Jiayi Pan

潘家怡

University of California, Berkeley

Hi 👋

I am a first-year PhD student at Berkeley AI Research. I work with Alane Suhr in the Berkeley NLP Group.

I enjoy understanding and making things. Lately, I have been most excited about evaluating and post-training (multi-modal) language models as decision-making agents.

From 2019 to 2023, I was a happy undergrad at the University of Michigan and Shanghai Jiao Tong University, where I worked with Joyce Chai, Dmitry Berenson, and Fan Wu.

I continuously reassess my lifestyle/objectives. Feedback is always welcome :)

Selected Awards/Honors:

Publications & Manuscripts

OpenDevin Community. Preprint.

Hao Bai*, Yifei Zhou*, Mert Cemri, Jiayi Pan, Alane Suhr, Sergey Levine, Aviral Kumar. Preprint.

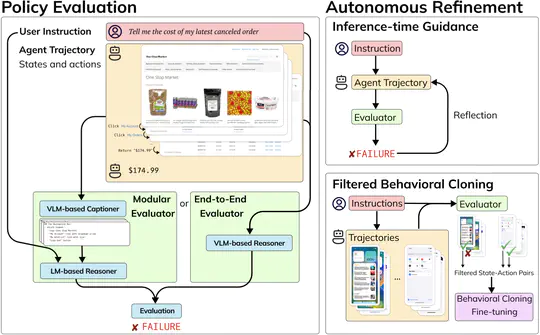

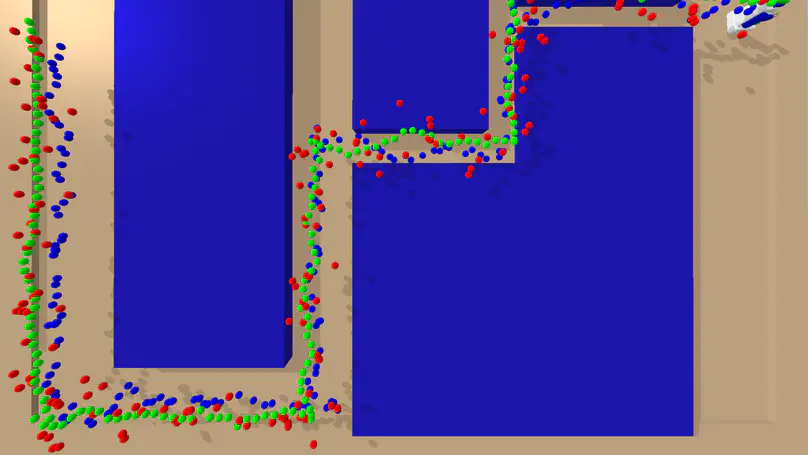

Jiayi Pan, Yichi Zhang, Nickolas Tomlin, Yifei Zhou, Sergey Levine, Alane Suhr. COLM 2024 / ⭐️ MAR Workshop @ CVPR 2024 Best Paper.

Yuexiang Zhai, Hao Bai*, Zipeng Lin*, Jiayi Pan*, Shengbang Tong*, Yifei Zhou*, Alane Suhr, Saining Xie, Yann LeCun, Yi Ma, Sergey Levine. Preprint 2024.

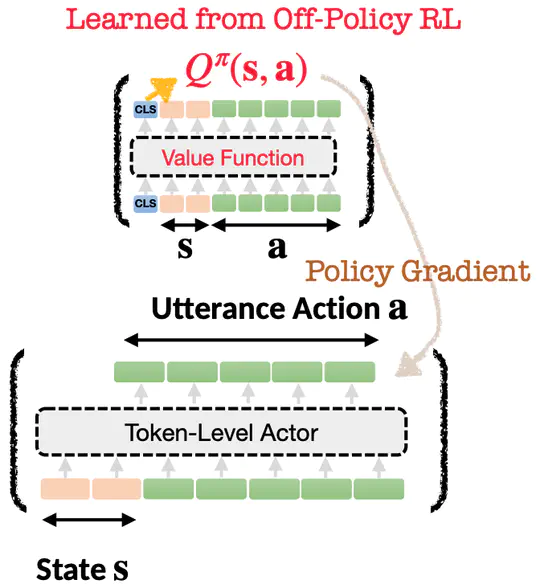

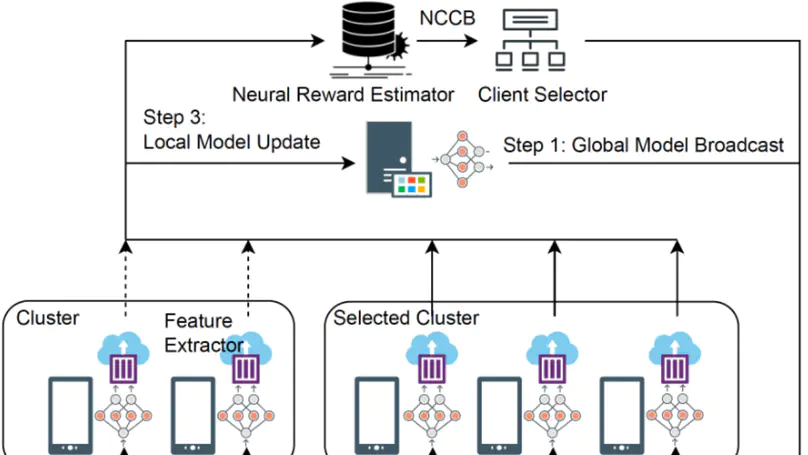

Yifei Zhou, Andrea Zanette, Jiayi Pan, Sergey Levine, Aviral Kumar. ICML 2024.

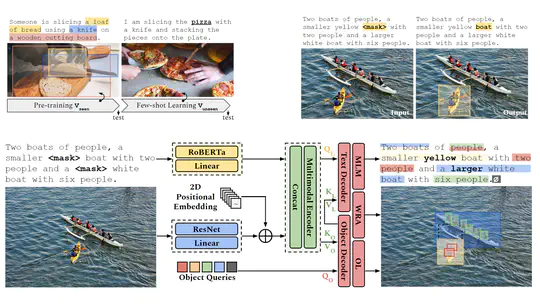

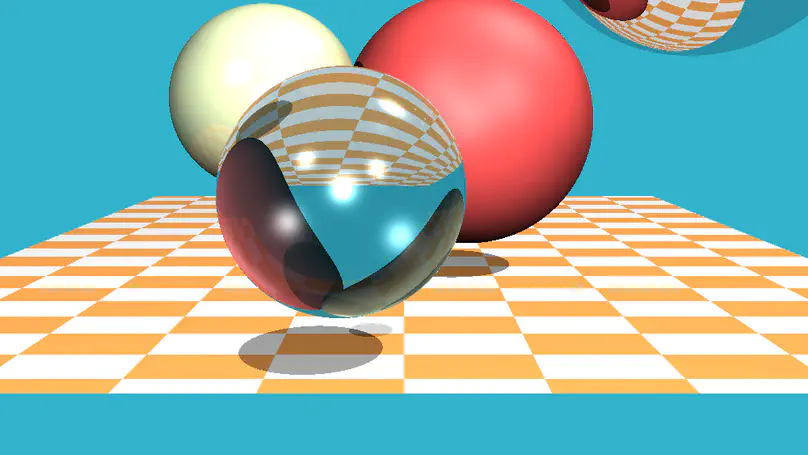

Sihan Xu*, Yidong Huang*, Jiayi Pan, Ziqiao Ma, Joyce Chai. CVPR 2024.

Yichi Zhang, Jiayi Pan, Yuchen Zhou, Rui Pan, Joyce Chai. EMNLP 2023.

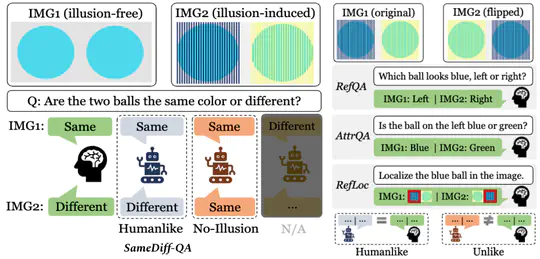

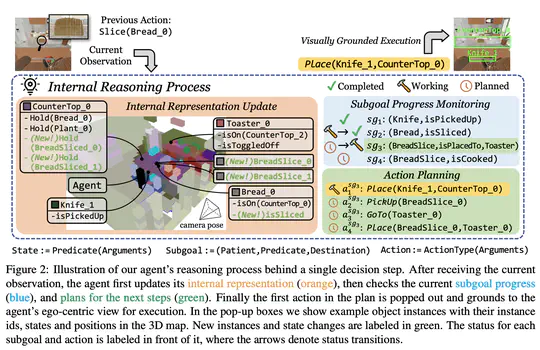

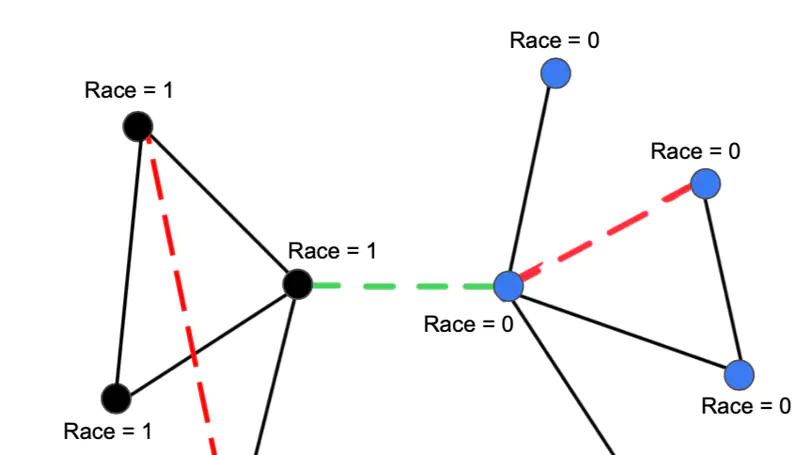

Ziqiao Ma*, Jiayi Pan*, Joyce Chai. ⭐️ ACL 2023 Outstanding Paper.

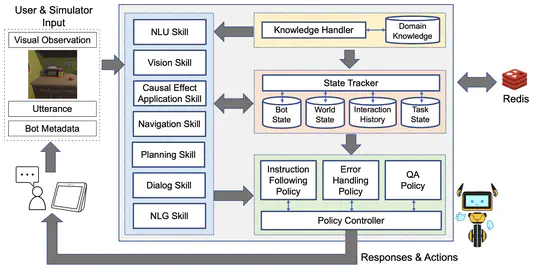

Team SEAGULL at UMich. 🏆 1st Place in the inaugural Alexa Prize SimBot Challenge.

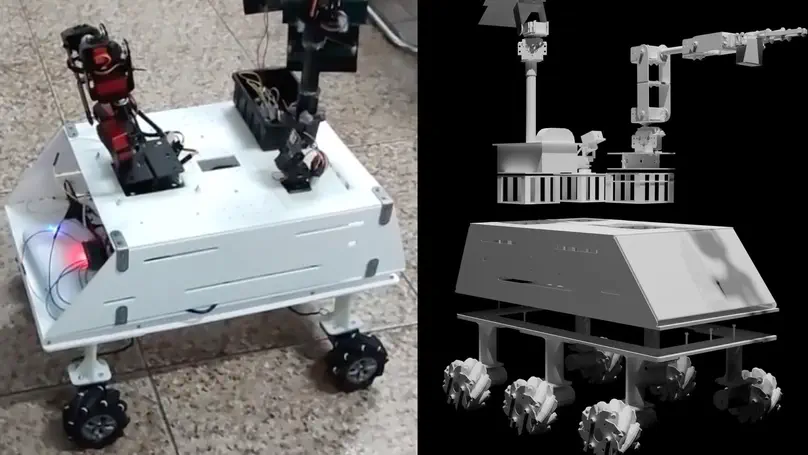

Jiayi Pan, Glen Chou, Dmitry Berenson. ICRA 2023.

Yichi Zhang, Jianing Yang, Jiayi Pan, Shane Storks, Nikhil Devraj, Ziqiao Ma, Keunwoo Peter Yu, Yuwei Bao, Joyce Chai. EMNLP 2022, Oral.

Side Projects

Contact

- Email: jiayipan [AT] berkeley [DOT] edu

Misc

- I recently started tracking what I consume and learn from. You can find the list here.

- I try to develop some habits. Currently, I am learning guitar and music theory.

- Growing up, I lived in quite a few places: Chongqing, Xinyang, Chengdu, Shanghai, Ann Arbor, and now the Bay Area.

- These days, I think a lot about how to align my research with a positive, counterfactual impact on the near future where AGI becomes a reality.

- Before doing AI research, I was quite into physics and participated in the Physics Olympiad during my high school years (although I wasn’t exceptionally strong). I still occasionally read physics books.

- I am a big fan of Elden Ring and Hollow Knight. I also play League of Legends with friends sometimes.